Understanding the postgres environment

Postgresql universally have 2 distinct parts. The binary and the data directory. The binary part contains Postgresql executable and the data contains the all the database (tables, indexes, pg_toasts and etc), transaction logs (WALs), postgresql.conf, indent (security).

The following are showing where the binary and data resides and the essential Postgres services.

Note: the binary and the data directories are highly custimizable. Different postgresql vendors may decide there get installed and reside. What's shown here is not a definitely path.

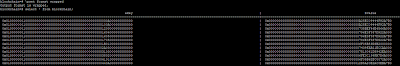

-bash-4.1$ ps -ef|egrep postgres

avahi 1791 1 0 Mar14 ? 00:00:00 avahi-daemon: running [postgres6ec-001.local]

postgres 2065 1 0 Mar14 ? 00:00:25 /usr/pgsql-9.2/bin/postmaster -p 5432 -D /var/lib/pgsql/9.2/data

postgres 2067 2065 0 Mar14 ? 00:00:00 postgres: logger process

postgres 2069 2065 0 Mar14 ? 00:00:00 postgres: checkpointer process

postgres 2070 2065 0 Mar14 ? 00:00:08 postgres: writer process

postgres 2071 2065 0 Mar14 ? 00:00:08 postgres: wal writer process

postgres 2072 2065 0 Mar14 ? 00:00:35 postgres: autovacuum launcher process

postgres 2073 2065 0 Mar14 ? 00:01:15 postgres: stats collector process

Creating basic database for testing. Roles and constraints are all omitted in this test.

-bash-4.1$ psql

psql (9.3.5, server 9.2.9)

Type "help" for help.

postgres=# create database blockchain;

CREATE DATABASE

postgres=#

Listing the database

postgres=# \l

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

------------+----------+----------+-------------+-------------+-----------------------

blockchain | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 |

postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 |

template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres +

| | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres +

| | | | | postgres=CTc/postgres

vcdb | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =Tc/postgres +

| | | | | postgres=CTc/postgres+

| | | | | vc=CTc/postgres

(5 rows)

postgres=#

You are now connected to database "blockchain" as user "postgres".

blockchain=# \d+

No relations found.

blockchain=#

Check current connection user and db.

blockchain=# select current_user, current_database();

current_user | current_database

--------------+------------------

postgres | blockchain

(1 row)

or

blockchain-# \conninfo

You are connected to database "blockchain" as user "postgres" via socket in "/tmp" at port "5432".

--note: column data type char(250) will not be large enough. Have to use text.

create table blockchain (

akey text,

bvalue text

);

CREATE TABLE

Making sure the dump file exist in the correct path.

blockchain-# \! pwd; ls

/var/lib/pgsql/blockchain

blocktest.txt data_output data_output.txt

blockchain=# \d blockchain

Table "public.blockchain"

Column | Type | Modifiers

--------+------+-----------

key | text |

bvalue | text |

blockchain=# COPY blockchain FROM '/var/lib/pgsql/blockchain/data_output.txt' (DELIMITER(':'));

COPY 9493

blockchain=# select count(*) from blockchain;

count

-------

9493

(1 row)

\pset columns 250

\pset format wrapped

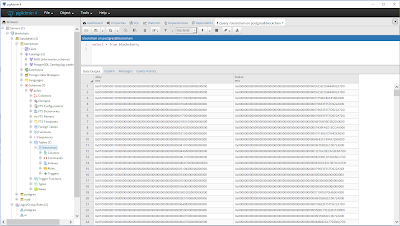

Viewing with Pg_Admin 4