Two of my VMs in the lab were pingable, but I could not SSH or telnet into them. The console of each VM showed the following error. Other VMs on the same ESX host were fine; only these two had SSH issues.

I put the ESX host into maintenance mode and then rebooted it, and everything came back up fine. I was told the switch had been having issues recently.

Tuesday, March 22, 2016

Friday, February 26, 2016

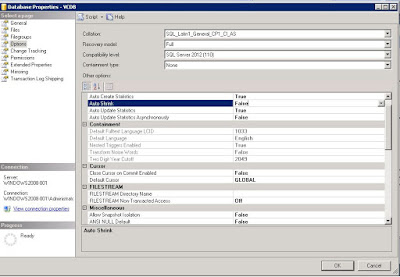

SQL Server: Why does my SQL Server database grow and shrink on its own?

Today a user asked me why his SQL Server database was growing and shrinking on its own. My SQL Server knowledge is shallow for the time being, so that triggered my curiosity and I started doing some reading. SQL Server has database features for exactly this purpose (autogrowth and autoshrink).

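First, get a general idea of which database is actually growing or shrinking by monitoring its files for a while:

```sql
-- Size of every data and log file on the instance.
-- size is stored in 8-KB pages, hence * 8 / 1024 to get MB.
-- FILEPROPERTY only resolves files of the *current* database,
-- so UsedSpaceMB is only meaningful for that database's files.
SELECT
    DB.name,
    MF.physical_name,
    MF.type_desc AS FileType,
    MF.size * 8 / 1024 AS FileSizeMB,
    FILEPROPERTY(MF.name, 'SpaceUsed') * 8 / 1024 AS UsedSpaceMB,
    MF.name AS LogicalName
FROM sys.master_files MF
JOIN sys.databases DB ON DB.database_id = MF.database_id;

-- Files of the current database only.
SELECT * FROM sys.database_files;
```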
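Running the query above a few times and comparing the results is what "monitoring it for a bit" boils down to. Below is a minimal Python sketch of that comparison, assuming each snapshot has been saved as a dict keyed by (database, logical file name) holding the raw `size` column in 8-KB pages; `size_changes()` and the sample numbers are hypothetical.

```python
"""Compare two snapshots of SQL Server file sizes (a sketch).

Assumption: each snapshot maps (database, logical_file_name) to the
sys.master_files.size value, which SQL Server reports in 8-KB pages.
"""

PAGE_KB = 8  # sys.master_files.size is a count of 8-KB pages


def pages_to_mb(pages: int) -> float:
    """Convert a page count to megabytes."""
    return pages * PAGE_KB / 1024


def size_changes(before: dict, after: dict) -> dict:
    """Return {(db, file): delta_mb} for files whose size changed.

    Positive deltas mean growth, negative deltas mean shrinking.
    Files present in only one snapshot are ignored.
    """
    changes = {}
    for key in before.keys() & after.keys():
        delta = pages_to_mb(after[key] - before[key])
        if delta != 0:
            changes[key] = delta
    return changes


if __name__ == "__main__":
    # Hypothetical sample data: two snapshots taken some time apart.
    first = {("VCDB", "VCDB"): 128000, ("VCDB", "VCDB_LOG"): 1280}
    second = {("VCDB", "VCDB"): 128000, ("VCDB", "VCDB_LOG"): 65536}
    for (db, name), mb in sorted(size_changes(first, second).items()):
        verb = "grew" if mb > 0 else "shrank"
        print(f"{db}/{name}: {verb} by {abs(mb):.1f} MB")
```

A file that alternates between large positive and negative deltas is the one worth checking for autoshrink or scheduled shrink jobs.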

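Check whether the autoshrink option is on for each database:

```sql
SELECT name, is_auto_shrink_on FROM sys.databases;
```

The same setting can also be checked from the GUI (in SSMS: Database Properties > Options > Auto Shrink).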

Other likely causes are reindexing, large batch jobs and database shrink operations; these do cause the transaction log to grow and shrink.

It might also be a DBA running DBCC SHRINKFILE, for example:

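```sql
-- BACKUP LOG takes the database name (assumed here to be VCDB),
-- not the logical name of the log file.
BACKUP LOG VCDB TO DISK = 'E:\SQLbackup.bak'
GO
-- DBCC SHRINKFILE acts on files of the current database;
-- the target size (10) is in MB.
USE VCDB
GO
DBCC SHRINKFILE (N'VCDB_LOG', 10)
GO
```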

Impact on Oracle if an ESX host goes down.

Here is a collection of scenarios for when ESX hosts go down.

Symptom: the vmkwarning and storagerm logs will spit out tons of the following messages:

```text
Some host is down, need to reset the slot allocation
Number of hosts has changed to 3
Number of hosts has changed to 8
Number of hosts has changed to 7
Some host is down, need to reset the slot allocation
```

Occasionally, the following also shows up; this happens when the storage is impacted:

```text
: NFSLock: 2208: File is being locked by a consumer on host
```
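Because these messages arrive in bulk, counting them is quicker than scrolling through the logs. A minimal Python sketch follows, assuming the storagerm and vmkwarning log files have been copied off the host; the message strings are the ones quoted above, while `summarize()` and the script usage are hypothetical, not a VMware tool.

```python
"""Count host-down symptoms in ESXi log text (a sketch).

Assumption: lines contain the message strings quoted above verbatim;
real log lines also carry timestamps and other prefixes, which the
searches below simply skip over.
"""
import re

HOST_COUNT = re.compile(r"Number of hosts has changed to (\d+)")
SLOT_RESET = "Some host is down, need to reset the slot allocation"
NFS_LOCK = re.compile(r"NFSLock: \d+: File is being locked by a consumer on host")


def summarize(lines):
    """Return the host-count history plus slot-reset and NFS-lock counts."""
    summary = {"host_counts": [], "slot_resets": 0, "nfs_locks": 0}
    for line in lines:
        match = HOST_COUNT.search(line)
        if match:
            summary["host_counts"].append(int(match.group(1)))
        if SLOT_RESET in line:
            summary["slot_resets"] += 1
        if NFS_LOCK.search(line):
            summary["nfs_locks"] += 1
    return summary


if __name__ == "__main__":
    import sys
    # Hypothetical usage: python esx_symptoms.py storagerm.log vmkwarning.log
    for path in sys.argv[1:]:
        with open(path, errors="replace") as f:
            print(path, summarize(f))
```

A non-zero `nfs_locks` count is the hint that storage, and therefore Oracle, is affected as well.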

As any Oracle DBA can already guess, the Oracle instance will crash; it all depends on the availability of the underlying disks. If the disks are down, the ASM disk group will go down with them.

The alert log might show some or all of the following:

```text
ORA-63999: data file suffered media failure
ORA-01114: IO error writing block to file 20 (block # 392072)
ORA-01110: data file 20: '+ASMDATA/st
ORA-15080: synchronous I/O operation failed to read block 0 of disk 7 in disk group DATA
ORA-27072: File I/O error
Linux-x86_64 Error: 5: Input/output error
Additional information: 4
Additional information: 24576
Additional information: 4294967295
NOTE: cache initiating offline of disk 7 group DATA
```
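To check quickly whether an alert log contains this pattern, tallying the ORA- codes is usually enough. A minimal Python sketch follows; the code set is simply the ORA- codes from the excerpt above (not an official classification), and `ora_counts()` / `storage_codes_seen()` are hypothetical helpers.

```python
"""Tally ORA- error codes in Oracle alert-log text (a sketch)."""
import re
from collections import Counter

ORA_CODE = re.compile(r"\bORA-(\d{5})\b")

# Codes that appeared together in the alert-log excerpt above when storage went away.
STORAGE_OUTAGE_CODES = {"ORA-63999", "ORA-01114", "ORA-01110", "ORA-15080", "ORA-27072"}


def ora_counts(lines):
    """Count every ORA-NNNNN code found in the given lines."""
    counts = Counter()
    for line in lines:
        for digits in ORA_CODE.findall(line):
            counts["ORA-" + digits] += 1
    return counts


def storage_codes_seen(lines):
    """Return which of the excerpt's storage-related codes occur in lines."""
    return STORAGE_OUTAGE_CODES & set(ora_counts(lines))
```

For example, passing an open alert log file (typically named alert_<SID>.log) to `storage_codes_seen()` returns an empty set when none of these codes are present.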

What can you do about it? With a single instance, not much. Bringing the ESX host back up should restore the services most of the time; otherwise, the DBA might need to restore and recover with RMAN. With a RAC setup spread across multiple hosts, check that the SCSI controller's SCSI Bus Sharing option is set to None; things should then fail over to the node residing on the healthy host.

Wednesday, January 13, 2016

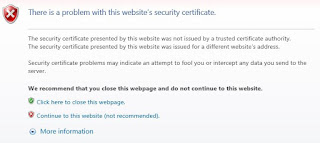

Oracle: Unable to access Oracle 12c EM from outside a vApp or VM.

I have seen users struggle with this for a long time, so I am posting this in the hope that it helps nail the issue faster. Not every Linux distribution needs this fix: I do not recall a default installation of Oracle 12c on Red Hat needing it at all, only CentOS. I could be wrong.

Inside the Oracle Database VM, EM is reachable at:

https://<ip address>:5500/em

From outside the VM, the same URL cannot be reached. If the VM can still be pinged and reached over SSH, this is a pretty obvious indication of a firewall issue, either in the VM itself or at the host layer.

In the VM, check that the firewall allows HTTPS and the EM port (5500).

EM is accessible now.
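Testing the TCP port from both sides narrows down where the block is: if a connection succeeds inside the VM but not from outside, the EM listener is fine and a firewall is in the way. A minimal Python sketch of that test; `port_open()` is a hypothetical helper, and 5500 is the EM port from the URL above.

```python
import socket


def port_open(host: str, port: int = 5500, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Refused connections, timeouts and unreachable hosts all raise
    OSError subclasses, which are reported as False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run `port_open("<ip address>")` once inside the VM and once from another machine; True inside and False outside points at the firewall rather than at EM itself.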

Tuesday, November 24, 2015

How to connect to a Workstation VM with PuTTY.

Connecting over SSH to a VM in local VMware Workstation is the same as connecting to any Linux VM residing on an ESX host, but simpler since everything is local.

Within VMware Workstation, locate the VM's IP address.

Type the IP into PuTTY. The IP usually starts with 192.168.x.x, as it is on a local network.

That's it.
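The 192.168.x.x rule of thumb can be checked with Python's standard `ipaddress` module before typing the address into PuTTY. A small sketch; `check_vm_ip()` and its messages are hypothetical, and the 192.168.0.0/16 range is the rule of thumb above, not something Workstation guarantees.

```python
import ipaddress

# Rule of thumb from the post: local Workstation VMs usually get 192.168.x.x.
WORKSTATION_RANGE = ipaddress.ip_network("192.168.0.0/16")


def check_vm_ip(ip: str) -> str:
    """Classify an address copied from Workstation (raises ValueError on a typo)."""
    addr = ipaddress.ip_address(ip)
    if addr in WORKSTATION_RANGE:
        return "local 192.168.x.x address: enter it in PuTTY (SSH, port 22)"
    if addr.is_private:
        return "private address outside 192.168.x.x: may still be local, double-check it"
    return "public address: probably not the local VM"
```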

Cloudera Hadoop namenode refused to stay up.

I installed Hadoop from Cloudera into Oracle VirtualBox. I have the same deployment on a Mac and other Windows boxes and never had an issue, but this one did.

```text
[cloudera@quickstart ~]$ hadoop fs -ls
ls: Call From quickstart.cloudera/10.0.2.15 to quickstart.cloudera:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
[cloudera@quickstart ~]$ service hadoop-hdfs-namenode status
Hadoop namenode is dead and pid file exists [FAILED]
[cloudera@quickstart ~]$ service status
status: unrecognized service
[cloudera@quickstart ~]$ uname -a
Linux quickstart.cloudera 2.6.32-358.el6.x86_64 #1 SMP Fri Feb 22 00:31:26 UTC 2013 x86_64 x86_64 x86_64 GNU/Linux
[cloudera@quickstart ~]$ service hadoop-hdfs-quickstart.cloudera status
hadoop-hdfs-quickstart.cloudera: unrecognized service
[cloudera@quickstart ~]$ service hadoop-hdfs-namemode status
hadoop-hdfs-namemode: unrecognized service
[cloudera@quickstart ~]$ service hadoop-hdfs-namenode status
Hadoop namenode is dead and pid file exists [FAILED]
[cloudera@quickstart ~]$ service hadoop-hdfs-quickstart.cloudera status
hadoop-hdfs-quickstart.cloudera: unrecognized service
[cloudera@quickstart ~]$ service hadoop-hdfs-namenode start
Error: root user required
[cloudera@quickstart ~]$ sudo service hadoop-hdfs-namenode start
starting namenode, logging to /var/log/hadoop-hdfs/hadoop-hdfs-namenode-quickstart.cloudera.out
Started Hadoop namenode: [ OK ]
[cloudera@quickstart ~]$
```

I'm not really sure what was going on: as soon as I started the namenode, it went back down in less than 30 seconds. Redeploying fixed it. The same installation works fine on other, more powerful machines, so I suspect the issue has something to do with the low specs of the machine I was using.
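The status "dead and pid file exists" is the LSB init-script status for a pid file that points at a process which is no longer running. The same test can be sketched in Python on Linux, which makes it easy to watch the namenode die after a start; `service_state()` is a hypothetical helper, and the pid-file location is an assumption (check the init script for the real path).

```python
import os


def service_state(pid_file: str) -> str:
    """Classify a daemon from its pid file (Linux/POSIX only).

    Returns "running", "dead but pid file exists", or "stopped".
    """
    try:
        with open(pid_file) as f:
            pid = int(f.read().strip())
    except FileNotFoundError:
        return "stopped"
    try:
        os.kill(pid, 0)  # signal 0: existence check only, nothing is delivered
    except ProcessLookupError:
        return "dead but pid file exists"
    except PermissionError:
        # The process exists but belongs to another user (e.g. hdfs).
        return "running"
    return "running"
```

If the state flips back to "dead but pid file exists" within seconds of a successful start, the log directory shown in the start message, /var/log/hadoop-hdfs/, is the next place to look.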

Monday, November 16, 2015

vCenter 6.0 upgrade failed.

In some cases, upgrading vCenter 5.5 to 6.0 can hit the following errors during the pre-upgrade check. They are due to artifacts left behind since vCenter 2.5 that got carried over from version to version.

```text
[HY000](20000) [Oracle][ODBC][Ora]ORA-20000: ERROR ! Missing constraints:
VPX_DEVICE.VPX_DEVICE_P1,VPX_DATASTORE.FK_VPX_DS_DC_REF_VPX_ENT;
ORA-06512: at line 260
```
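The error lists each missing constraint as TABLE.CONSTRAINT, separated by commas and terminated by a semicolon. When the list is long, a small Python sketch can pull out the pairs and generate lookups against Oracle's USER_CONSTRAINTS view to confirm what is really missing before changing anything; `missing_constraints()` and `check_queries()` are hypothetical helpers, and the parsing assumes the message format shown above.

```python
import re


def missing_constraints(message: str):
    """Extract (table, constraint) pairs from the pre-upgrade ORA-20000 text."""
    match = re.search(r"Missing constraints:\s*([^;]+);", message)
    if not match:
        return []
    pairs = []
    for item in match.group(1).split(","):
        table, _, constraint = item.strip().partition(".")
        pairs.append((table, constraint))
    return pairs


def check_queries(pairs):
    """Build one USER_CONSTRAINTS lookup per pair (run as the vCenter schema owner)."""
    return [
        "SELECT constraint_name FROM user_constraints "
        f"WHERE table_name = '{table}' AND constraint_name = '{name}';"
        for table, name in pairs
    ]
```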

Create the constraints. Use at your own risk!

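```sql
-- Recreate the missing primary key on top of the existing index VPX_DEVICE_P1.
alter table VPX_DEVICE add constraint VPX_DEVICE_P1 primary key (DEVICE_ID) using index VPX_DEVICE_P1;
-- Rename in this order: the first rename frees the name
-- FK_VPX_DS_REF_VPX_ENTITY for the second one.
alter table VPX_DATASTORE rename constraint FK_VPX_DS_REF_VPX_ENTITY to FK_VPX_DS_DC_REF_VPX_ENT;
alter table VPX_DATASTORE rename constraint FK_VPX_DS_REF_VPX_ENTI to FK_VPX_DS_REF_VPX_ENTITY;
```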

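Another variant of the issue is that the unique index does not even exist. One can simply try to drop and recreate it (if the index is missing entirely, the DROP INDEX just fails with ORA-01418 and the remaining statements still apply):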

```sql
-- Rebuild the unique index, then attach the primary key to it.
drop index VPX_DEVICE_P1;
create unique index VPX_DEVICE_P1 ON VPX_DEVICE(DEVICE_ID);
alter table VPX_DEVICE add constraint VPX_DEVICE_P1 primary key (DEVICE_ID) using index VPX_DEVICE_P1;
alter table VPX_DATASTORE rename constraint FK_VPX_DS_REF_VPX_ENTITY to FK_VPX_DS_DC_REF_VPX_ENT;
alter table VPX_DATASTORE rename constraint FK_VPX_DS_REF_VPX_ENTI to FK_VPX_DS_REF_VPX_ENTITY;
```
